Introduction

As a Python developer, I often need to work with cloud services like AWS S3. However, relying on third-party services during development introduces several challenges: setting up a cloud environment, paying for it, dealing with latency, and depending on internet access. To address these issues, I tried MinIO, a powerful object storage solution that implements the S3 API and therefore lets you simulate an S3 environment locally. In this article, I’ll walk through a quick MinIO setup with Python so you can try out the tool, and then I’ll cover what MinIO is capable of.

Why Simulate S3 Locally?

Using a local S3 simulation (like MinIO) offers several benefits:

- Eliminate External Dependencies: By simulating S3 locally, you remove the need to rely on external cloud services during development, ensuring that your workflow is unaffected by internet issues or AWS outages.

- Reduce Costs: Running your own local S3 instance means you won’t incur costs for data storage or transfer on AWS, which is especially useful during the development and testing phases.

- Speed Up Development Cycles: Local simulation removes the latency of round trips to distant servers, which is especially noticeable when transferring large files, so your development loop becomes quicker.

Prerequisites

- Python 3.7+

- MinIO, run through Docker (we’ll guide you through the installation)

- boto3 (the AWS SDK for Python, installable via pip install boto3)
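If you want to check these prerequisites before going further, here is a small sketch. The function name check_prerequisites is my own choice, and it also looks for the docker executable, since the installation below relies on Docker.

```python
import importlib.util
import shutil
import sys


def check_prerequisites(min_python=(3, 7)):
    """Return a list of missing prerequisites; an empty list means everything was found."""
    problems = []
    if sys.version_info < min_python:
        found = ".".join(str(part) for part in sys.version_info[:3])
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    # find_spec returns None when the package cannot be imported
    if importlib.util.find_spec("boto3") is None:
        problems.append("boto3 is missing: run `pip install boto3`")
    # Docker is only needed for the container-based setup used in this article
    if shutil.which("docker") is None:
        problems.append("docker executable not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_prerequisites()
    print("All prerequisites found" if not issues else "\n".join(issues))
```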

Step 1: Installing and Configuring MinIO

1. Installing MinIO Locally

If you already have a working Python environment, using Docker is one of the easiest ways to get MinIO up and running. Here’s the command to do so:

docker run -p 9000:9000 -p 9001:9001 --name minio \
  -e "MINIO_ROOT_USER=admin" \
  -e "MINIO_ROOT_PASSWORD=password" \
  minio/minio server /data --console-address ":9001"

This command starts a MinIO instance on your local machine, accessible at http://localhost:9000 for object storage and http://localhost:9001 for the admin console.
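MinIO can take a few seconds before it accepts requests. It exposes a liveness endpoint, /minio/health/live, on its API port, so a script can wait for it before sending S3 calls. The sketch below polls that endpoint using only the standard library; the wait_for_minio helper, its 30-second default timeout and its 1-second retry interval are illustrative choices, not part of MinIO.

```python
import time
import urllib.request


def wait_for_minio(base_url: str = "http://localhost:9000", timeout: float = 30.0) -> bool:
    """Poll MinIO's liveness endpoint until it returns HTTP 200 or `timeout` seconds pass."""
    health_url = base_url.rstrip("/") + "/minio/health/live"
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(health_url, timeout=2) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Connection refused, reset, timed out, or an HTTP error status:
            # the server is not ready yet, so retry until the deadline.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(1)
```

Call wait_for_minio() at the top of a script or test fixture and abort if it returns False, rather than letting the first boto3 call fail with a connection error.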

If you don’t have a working Python environment, or if you want a dedicated environment to try out the content of this article, you can instead use Docker Compose to set up a complete containerized stack:

version: "3.9"

services:
  python-app:
    image: python:3.11-slim
    # Keep the container alive so you can open a shell in it
    command: sleep infinity
    depends_on:
      - local-s3-storage

  local-s3-storage:
    image: minio/minio
    environment:
      MINIO_ROOT_USER: "admin"
      MINIO_ROOT_PASSWORD: "password"
    ports:
      - "9000:9000"
      - "9001:9001"
    volumes:
      - minio_data:/data
    command: server /data --console-address ":9001"

  create-bucket:
    image: minio/mc
    depends_on:
      - local-s3-storage
    # Retry until MinIO (reached by its service name) accepts the alias,
    # then create the bucket used by the Python script
    entrypoint: >
      /bin/sh -c "
      until (/usr/bin/mc alias set myminio http://local-s3-storage:9000 admin password) do sleep 5; done &&
      /usr/bin/mc mb --ignore-existing myminio/my-bucket
      "

volumes:
  minio_data: {}

NB: this setup is not production-ready; the unsafe credentials are deliberate and meant for learning only.
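One caveat with the Compose setup: the Python script in Step 2 hardcodes http://localhost:9000, which only works from your host. Inside the python-app container, localhost is the container itself, and MinIO is reachable through its service name instead, i.e. http://local-s3-storage:9000. A minimal way to support both is to read the endpoint from an environment variable. MINIO_URL as an environment variable name is a hypothetical choice here; neither MinIO nor boto3 reads it on its own.

```python
import os

# Endpoint used when the hypothetical MINIO_URL variable is not set (running on the host)
DEFAULT_ENDPOINT = "http://localhost:9000"


def resolve_minio_endpoint(env=None) -> str:
    """Return the MinIO endpoint from MINIO_URL, falling back to the host default."""
    env = os.environ if env is None else env
    # An unset or empty variable falls back to the default endpoint
    value = env.get("MINIO_URL") or DEFAULT_ENDPOINT
    return value.rstrip("/")
```

You would then add MINIO_URL: "http://local-s3-storage:9000" under an environment: key of the python-app service, and set MINIO_URL = resolve_minio_endpoint() in the script instead of the hardcoded URL.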

2. Accessing MinIO’s Web Interface

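After starting MinIO, navigate to http://localhost:9001 (the console port set with --console-address) in your web browser. Log in with the credentials (admin and password) you provided in the Docker command. From here, you can create buckets to store your objects, just as you would with AWS S3. The Python script below expects a bucket named my-bucket, so create it here if it does not exist yet.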
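Instead of clicking through the console, you can also create the bucket from Python. The sketch below assumes boto3's low-level client API (list_buckets and create_bucket); ensure_bucket and make_minio_client are hypothetical helper names, and the default credentials are the demo ones used above.

```python
def ensure_bucket(s3_client, bucket_name: str) -> bool:
    """Create bucket_name if it does not exist yet; return True if it was created."""
    response = s3_client.list_buckets()
    existing = {bucket["Name"] for bucket in response.get("Buckets", [])}
    if bucket_name in existing:
        return False
    s3_client.create_bucket(Bucket=bucket_name)
    return True


def make_minio_client(endpoint_url="http://localhost:9000",
                      access_key="admin", secret_key="password"):
    """Build a boto3 S3 client pointed at the local MinIO instance."""
    import boto3  # imported lazily so ensure_bucket itself has no boto3 dependency

    return boto3.client(
        "s3",
        endpoint_url=endpoint_url,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        region_name="us-east-1",  # MinIO's default region
    )
```

For example, ensure_bucket(make_minio_client(), "my-bucket") returns True the first time and False once the bucket exists. Passing the client in as a parameter keeps ensure_bucket easy to test with a fake object.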

Step 2: Integrating MinIO with Python

Now that MinIO is running locally, we’ll write a Python script that talks to it through boto3, the AWS SDK for Python.

1. Setting Up a Python Script

Create a Python file, minio_example.py, and add the following code to interact with your local MinIO instance:

from boto3.session import Session
from botocore.exceptions import ClientError, NoCredentialsError

# MinIO configuration (same credentials as the Docker setup)
MINIO_URL = "http://localhost:9000"
MINIO_ACCESS_KEY = "admin"
MINIO_SECRET_KEY = "password"
BUCKET_NAME = "my-bucket"

# Create a boto3 session with the MinIO root credentials
session = Session(
    aws_access_key_id=MINIO_ACCESS_KEY,
    aws_secret_access_key=MINIO_SECRET_KEY,
    region_name="us-east-1",  # MinIO's default region, pinned instead of inherited from local AWS config
)

# S3 resource pointed at the local MinIO endpoint instead of AWS
s3 = session.resource("s3", endpoint_url=MINIO_URL)


def upload_file(file_path, file_name):
    try:
        # Upload the file to MinIO; the context manager closes the file handle
        with open(file_path, "rb") as f:
            s3.Bucket(BUCKET_NAME).put_object(Key=file_name, Body=f)
        print(f"File {file_name} uploaded successfully to {BUCKET_NAME}.")
    except NoCredentialsError:
        print("Error: No credentials found.")
    except ClientError as e:
        # Wrong credentials, a missing bucket, etc. are reported as ClientError
        print(f"Error: {e.response['Error']['Code']}")


def download_file(file_name, download_path):
    try:
        # Download the object from MinIO and write its content locally
        obj = s3.Bucket(BUCKET_NAME).Object(file_name).get()
        with open(download_path, "wb") as f:
            f.write(obj["Body"].read())
        print(f"File {file_name} downloaded successfully to {download_path}.")
    except s3.meta.client.exceptions.NoSuchKey:
        print("Error: File not found.")
    except ClientError as e:
        print(f"Error: {e.response['Error']['Code']}")


if __name__ == "__main__":
    # Example usage: replace these placeholder paths with real ones
    upload_file("path/to/your/local/file.txt", "file.txt")
    download_file("file.txt", "path/to/download/location/file.txt")

This script will allow you to upload files to and download files from your local MinIO instance, mimicking how you would interact with AWS S3.
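The script above uses boto3's resource API. If you prefer the lower-level client API, or want a quick sanity check that your MinIO setup works end to end, a round trip with an in-memory payload is enough. This is a sketch: round_trip_check and the healthcheck.txt key are hypothetical names, and the test object is deleted afterwards.

```python
def round_trip_check(s3_client, bucket_name: str, key: str = "healthcheck.txt") -> bool:
    """Upload a small payload, read it back, delete it, and report whether the bytes match."""
    payload = b"hello from minio"
    s3_client.put_object(Bucket=bucket_name, Key=key, Body=payload)
    try:
        body = s3_client.get_object(Bucket=bucket_name, Key=key)["Body"].read()
    finally:
        # Clean up the test object even if the read fails
        s3_client.delete_object(Bucket=bucket_name, Key=key)
    return body == payload
```

With a client built from the same boto3 session (for instance session.client("s3", endpoint_url=MINIO_URL)), round_trip_check(client, "my-bucket") should return True.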

2. Running the Script

You can run the script using the command:

python minio_example.py

When you run it, the script will:

- Upload a file from your local system to the MinIO bucket.

- Download that file back from MinIO to the specified local path.

This provides a seamless experience, as if you were interacting with an actual S3 bucket on AWS, but without the need for an internet connection or an AWS account.

What Are MinIO’s Capabilities?

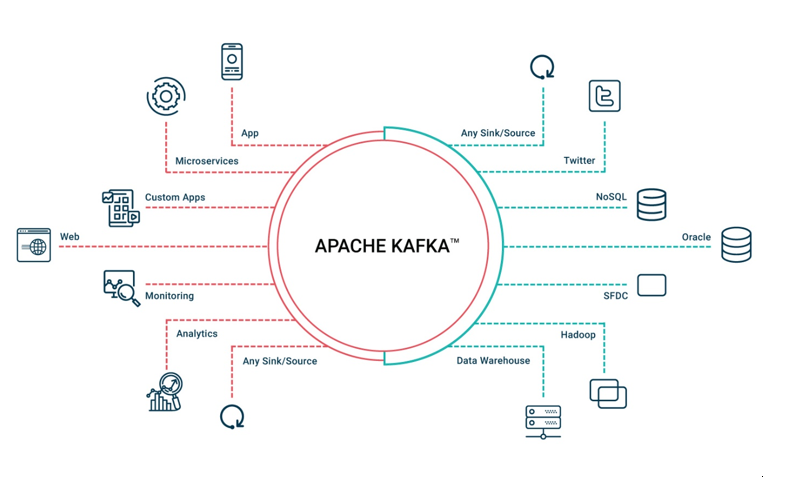

S3 API Compatibility: If you’re already working with AWS S3, you’ll feel right at home with MinIO. It implements the S3 API, so existing code and tools usually work with little or no change beyond pointing them at the MinIO endpoint, as the boto3 script above does with endpoint_url. This means less vendor lock-in and easy integration with your current workflows.

SDKs and CLI: MinIO provides SDKs for popular languages like Python, Java, Go, and JavaScript, making it easy to interact with the server programmatically. The mc command-line tool offers a convenient way to manage buckets, objects, and configurations.

Cloud-Native Design: MinIO is designed to run anywhere – on your laptop, in a container, on Kubernetes, or in the cloud. Its lightweight and containerized architecture makes it easy to deploy and scale.

Performance and Scalability: MinIO is optimized for high throughput and low latency, crucial for demanding applications. It can scale horizontally to handle massive datasets and high request volumes.

Data Protection: MinIO offers features like erasure coding and bitrot protection to ensure data durability. Encryption options are available for data at rest and in transit to meet your security requirements.
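Erasure coding splits each object into data and parity shards spread across the drives of an erasure set. In a simplified model (ignoring metadata overhead and write-quorum rules), a set of N drives with parity M stores data on N - M drives and, according to MinIO's documentation, can keep serving reads after losing up to M drives. The arithmetic is sketched below; erasure_set_summary is a hypothetical helper, and the 0 to N/2 parity range is my assumption about MinIO's limits.

```python
def erasure_set_summary(drives: int, parity: int, drive_size_tb: float) -> dict:
    """Simplified capacity/tolerance figures for one erasure set (metadata overhead ignored)."""
    # Assumption: parity ranges from 0 to half the drives in the set
    if drives < 1 or not 0 <= parity <= drives // 2:
        raise ValueError("parity must be between 0 and half the number of drives")
    data_drives = drives - parity
    return {
        "usable_tb": data_drives * drive_size_tb,
        "efficiency": data_drives / drives,
        # Number of drives that can be lost while reads still succeed
        "tolerated_drive_failures_for_reads": parity,
    }
```

For instance, 16 drives of 1 TB with parity 4 give 12 TB of usable capacity (75% efficiency) and tolerate 4 drive failures for reads: higher parity buys durability at the cost of usable space.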

MinIO can also be used in production, for use cases such as:

Microservices: Use MinIO as a persistent storage layer for your microservices architecture.

Machine Learning: Store and manage large datasets for training and deploying machine learning models.

Application Data: Store images, videos, logs, and other unstructured data generated by your applications.

DevOps and CI/CD: Integrate MinIO into your CI/CD pipelines for artifact storage and deployment.

Author: Michael LACROIX, Principal Consultant