In a complex infrastructure, firewalls often get in the way.

When a connection fails, is the problem our application or the network?

The .NET TcpListener class, used from PowerShell, can help us answer that.

Here is a sample script that opens a specific port and prints incoming data along with connection information.

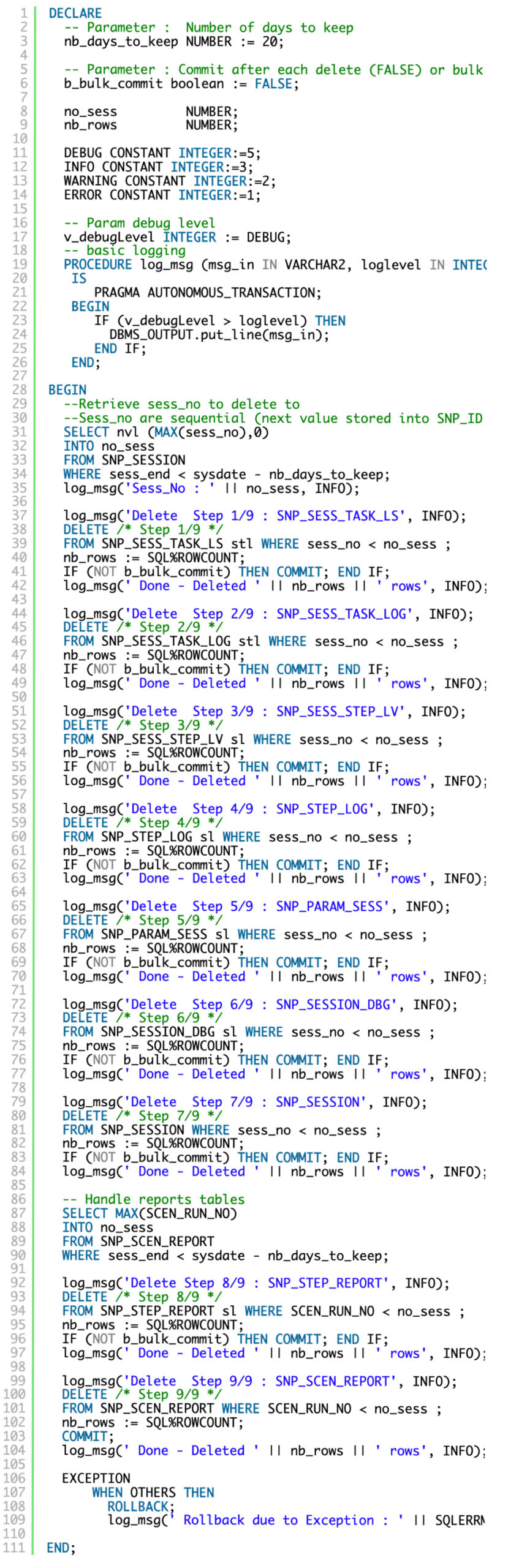

The Code

#region Hardcoded Parameters

$iPortToTest = 6666

#endregion

#region Internal Parameters

$_Listener

#endregion

#region Internal Function

Function Open-TCPPort {

[CmdletBinding()]

Param (

[Parameter(Mandatory=$true, Position=0)]

[ValidateNotNullOrEmpty()]

[int] $Port

)

Process {

Try {

# Start Listener

$endpoint = new-object System.Net.IPEndPoint([ipaddress]::any,$Port)

$listener = new-object System.Net.Sockets.TcpListener $endpoint

$listener.start()

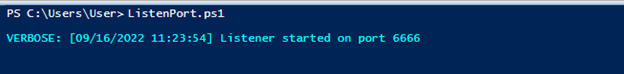

Write-Verbose ("[$(Get-Date)] Listener started on port {0} " -f $Port) -Verbose

Return $listener

}

Catch {

$mess = "Couldn't start listener : " + $Error[0]

Write-Error -Message $mess -ErrorAction Stop

}

}

}

Function Receive-TCPMessage {

[CmdletBinding()]

Param (

[Parameter(Mandatory=$true, Position=0)]

[ValidateNotNullOrEmpty()]

[System.Net.Sockets.TcpListener] $listener

)

Process {

Try {

# Accept connection

$data = $listener.AcceptTcpClient()

Write-Verbose ("[$(Get-Date)] New Connection from {0} Source port <{1}>" -f

$data.Client.RemoteEndPoint.Address, $Data.Client.RemoteEndPoint.Port) -Verbose

# Stream setup

$stream = $data.GetStream()

$bytes = New-Object System.Byte[] 1024

# Read Data from stream and write it to host

while (($i = $stream.Read($bytes,0,$bytes.Length)) -ne 0){

$EncodedText = New-Object System.Text.ASCIIEncoding

$data = $EncodedText.GetString($bytes,0, $i)

Write-Output $data

}

}

Catch {

$mess = "Receive Message failed with: `n" + $Error[0]

Write-Error -Message $mess -ErrorAction Stop

}

Finally {

# Close stream

$stream.close()

}

}

}

#endregion Internal Function

#----------------------------------------------

#region Main Block

#----------------------------------------------

$_Listener = Open-TCPPort($iPortToTest)

try{

while($true) {

try{

# Test for pending connection

if($_Listener.Pending()){

Receive-TCPMessage($_Listener)

}

Start-Sleep -Milliseconds 50

}

catch {

$mess = "Receive Message failed with: `n" + $Error[0]

Write-Error -Message $mess -ErrorAction Stop

}

}

}

finally{

$_Listener.stop()

}

#----------------------------------------------

#endregion Main Block

#----------------------------------------------

The Usage

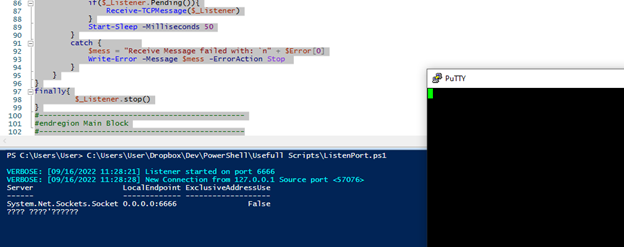

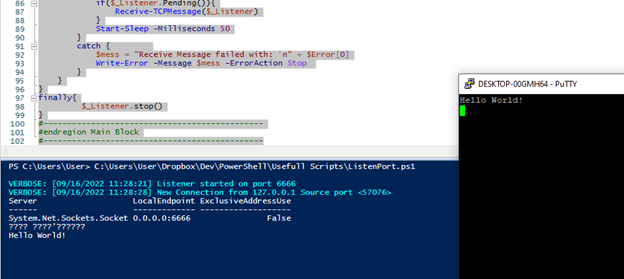

Set the port you want to listen on in the script parameter $iPortToTest.

Run the script. A message should confirm that the listener has started on that port.

Each incoming connection is then displayed (remote address, source port and incoming data).

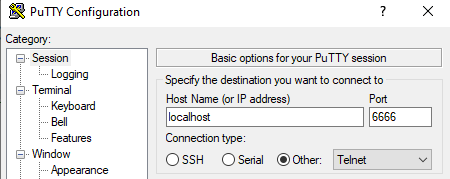
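If no terminal client is at hand, a test message can also be sent from PowerShell itself. The following is only a minimal sketch, assuming the listener is already running and reachable. The function name Send-TCPTestMessage and its parameters are hypothetical and are not part of the script. It uses the .NET TcpClient class and encodes the text as ASCII, to match the decoding done by the listener.

```powershell
# Hypothetical helper, not part of the original script: sends one ASCII
# message to a TCP port and then closes the connection.
Function Send-TCPTestMessage {
    [CmdletBinding()]
    Param (
        [Parameter(Mandatory=$true, Position=0)]
        [string] $ComputerName,

        [Parameter(Mandatory=$true, Position=1)]
        [int] $Port,

        [Parameter(Position=2)]
        [string] $Message = "Hello from PowerShell"
    )

    $client = New-Object System.Net.Sockets.TcpClient
    Try {
        # Connect throws a SocketException if the port is closed or filtered
        $client.Connect($ComputerName, $Port)
        $stream = $client.GetStream()

        # The listener decodes with ASCIIEncoding, so encode the same way
        $bytes = [System.Text.Encoding]::ASCII.GetBytes($Message)
        $stream.Write($bytes, 0, $bytes.Length)
        $stream.Flush()
    }
    Finally {
        # Closing the client also closes its stream, which ends the listener's read loop
        $client.Close()
    }
}

# Example call (run it while the listener script is active):
# Send-TCPTestMessage -ComputerName localhost -Port 6666 -Message "ping"
```

If the call throws a connection error while the listener is running, the port is probably blocked somewhere on the network path. If the message shows up on the listener side, the network is fine and the application is the more likely suspect.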

A simple local test using PuTTY: